Key terms in the Kubernetes Dashboard

Cluster

- namespace

Namespaces are a way to divide cluster resources between multiple users. It is not necessary to use multiple namespaces just to separate slightly different resources, such as different versions of the same software: use labels to distinguish resources within the same namespace.

Kubernetes starts with three initial namespaces:

default: The default namespace for objects with no other namespace.

kube-system: The namespace for objects created by the Kubernetes system.

kube-public: This namespace is created automatically and is readable by all users (including those not authenticated). It is mostly reserved for cluster usage, in case some resources should be visible and readable publicly throughout the whole cluster. The public aspect of this namespace is only a convention, not a requirement.

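Not every resource lives in a namespace. To list the resource types that are not namespaced, and then those that are:

kubectl api-resources --namespaced=false

kubectl api-resources --namespaced=true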

Each namespace has its own:

resources - pods, services, replica sets, etc.

policies - who can or cannot perform actions in their community

constraints - this community is allowed to run this many pods, etc.

- nodes

A node is a worker machine in Kubernetes.

kubectl describe node

- persistent volume

Managing storage is a distinct problem from managing compute. The PersistentVolume subsystem provides an API for users and administrators that abstracts details of how storage is provided from how it is consumed. To do this we introduce two new API resources: PersistentVolume and PersistentVolumeClaim.

A PersistentVolume (PV) is a piece of storage in the cluster that has been provisioned by an administrator or dynamically provisioned using Storage Classes. It is a resource in the cluster just like a node is a cluster resource. PVs are volume plugins like Volumes, but have a lifecycle independent of any individual pod that uses the PV. This API object captures the details of the implementation of the storage, be that NFS, iSCSI, or a cloud-provider-specific storage system.

The lifecycle of a volume has three stages: provisioning, binding, and using.

- roles/RBAC

RBAC uses the rbac.authorization.k8s.io API group to drive authorization decisions, allowing admins to dynamically configure policies through the Kubernetes API.

In the RBAC API, a role contains rules that represent a set of permissions. Permissions are purely additive (there are no “deny” rules). A role can be defined within a namespace with a Role, or cluster-wide with a ClusterRole. A role binding grants the permissions defined in a role to a user or set of users. It holds a list of subjects (users, groups, or service accounts), and a reference to the role being granted. Permissions can be granted within a namespace with a RoleBinding, or cluster-wide with a ClusterRoleBinding.
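To make the "purely additive" point concrete, here is a minimal Python sketch of how role bindings could be evaluated. It is a toy model, not the Kubernetes authorizer: the `Rule`, `Binding`, and `is_allowed` names are hypothetical, and a binding with `namespace=None` stands in for a ClusterRoleBinding.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Rule:
    verbs: frozenset       # e.g. {"get", "list"}
    resources: frozenset   # e.g. {"pods"}


@dataclass(frozen=True)
class Binding:
    subject: str                 # user, group, or service account name
    rules: Tuple[Rule, ...]      # rules of the role being granted
    namespace: Optional[str]     # None models a cluster-wide binding


def is_allowed(bindings, subject, verb, resource, namespace):
    """Return True if any binding grants the request; there is no deny rule."""
    for binding in bindings:
        if binding.subject != subject:
            continue
        if binding.namespace is not None and binding.namespace != namespace:
            continue
        if any(verb in r.verbs and resource in r.resources for r in binding.rules):
            return True
    return False


# Hypothetical example data: "jane" may read pods in "dev", "ops" everywhere.
pod_reader = (Rule(frozenset({"get", "list"}), frozenset({"pods"})),)
bindings = [
    Binding("jane", pod_reader, "dev"),
    Binding("ops", pod_reader, None),
]
print(is_allowed(bindings, "jane", "get", "pods", "dev"))
print(is_allowed(bindings, "jane", "get", "pods", "prod"))
```

Because access is the union of everything that is granted, the only way to take a permission away in this model is to remove the binding or the rule that grants it.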

- storage class

A StorageClass provides a way for administrators to describe the “classes” of storage they offer. Different classes might map to quality-of-service levels, or to backup policies, or to arbitrary policies determined by the cluster administrators.

Each StorageClass contains the fields provisioner, parameters, and reclaimPolicy, which are used when a PersistentVolume belonging to the class needs to be dynamically provisioned.

Workloads

- Cron jobs

One CronJob object is like one line of a crontab (cron table) file. It runs a job periodically on a given schedule, written in Cron format.
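As a rough illustration of the Cron format, the Python sketch below checks whether a five-field schedule (minute, hour, day of month, month, day of week) matches a given time. It is a simplified matcher, not the parser Kubernetes uses: it supports only `*`, single numbers, comma lists, ranges, and `*/n` or `a-b/n` steps, and it requires all five fields to match. The function names are hypothetical.

```python
from datetime import datetime


def _field_matches(field: str, value: int, lowest: int) -> bool:
    """Check one cron field against a value; `lowest` is the field's minimum."""
    for part in field.split(","):
        step = 1
        if "/" in part:
            part, step_text = part.split("/")
            step = int(step_text)
        if part == "*":
            if (value - lowest) % step == 0:
                return True
        elif "-" in part:
            start, end = (int(x) for x in part.split("-"))
            if start <= value <= end and (value - start) % step == 0:
                return True
        elif value == int(part):
            return True
    return False


def cron_matches(schedule: str, when: datetime) -> bool:
    """Return True if `when` falls on the schedule (simplified semantics)."""
    minute, hour, day, month, weekday = schedule.split()
    cron_weekday = when.isoweekday() % 7  # cron counts Sunday as 0
    return (
        _field_matches(minute, when.minute, 0)
        and _field_matches(hour, when.hour, 0)
        and _field_matches(day, when.day, 1)
        and _field_matches(month, when.month, 1)
        and _field_matches(weekday, cron_weekday, 0)
    )


# "*/5 * * * *" means every five minutes.
print(cron_matches("*/5 * * * *", datetime(2024, 1, 1, 12, 10)))
```

In a CronJob object the same string goes into the `schedule` field of the spec.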

- Daemon Sets

A DaemonSet ensures that all (or some) Nodes run a copy of a Pod. As nodes are added to the cluster, Pods are added to them. As nodes are removed from the cluster, those Pods are garbage collected. Deleting a DaemonSet will clean up the Pods it created.

Some typical uses of a DaemonSet are:

running a cluster storage daemon, such as glusterd, ceph, on each node.

running a logs collection daemon on every node, such as fluentd or logstash.

running a node monitoring daemon on every node, such as Prometheus Node Exporter, Sysdig Agent, collectd, Dynatrace OneAgent, AppDynamics Agent, Datadog agent, New Relic agent, Ganglia gmond or Instana Agent.

Note: marking a node unschedulable does not affect the DaemonSet controller; a DaemonSet still keeps a copy of its Pod on every node.

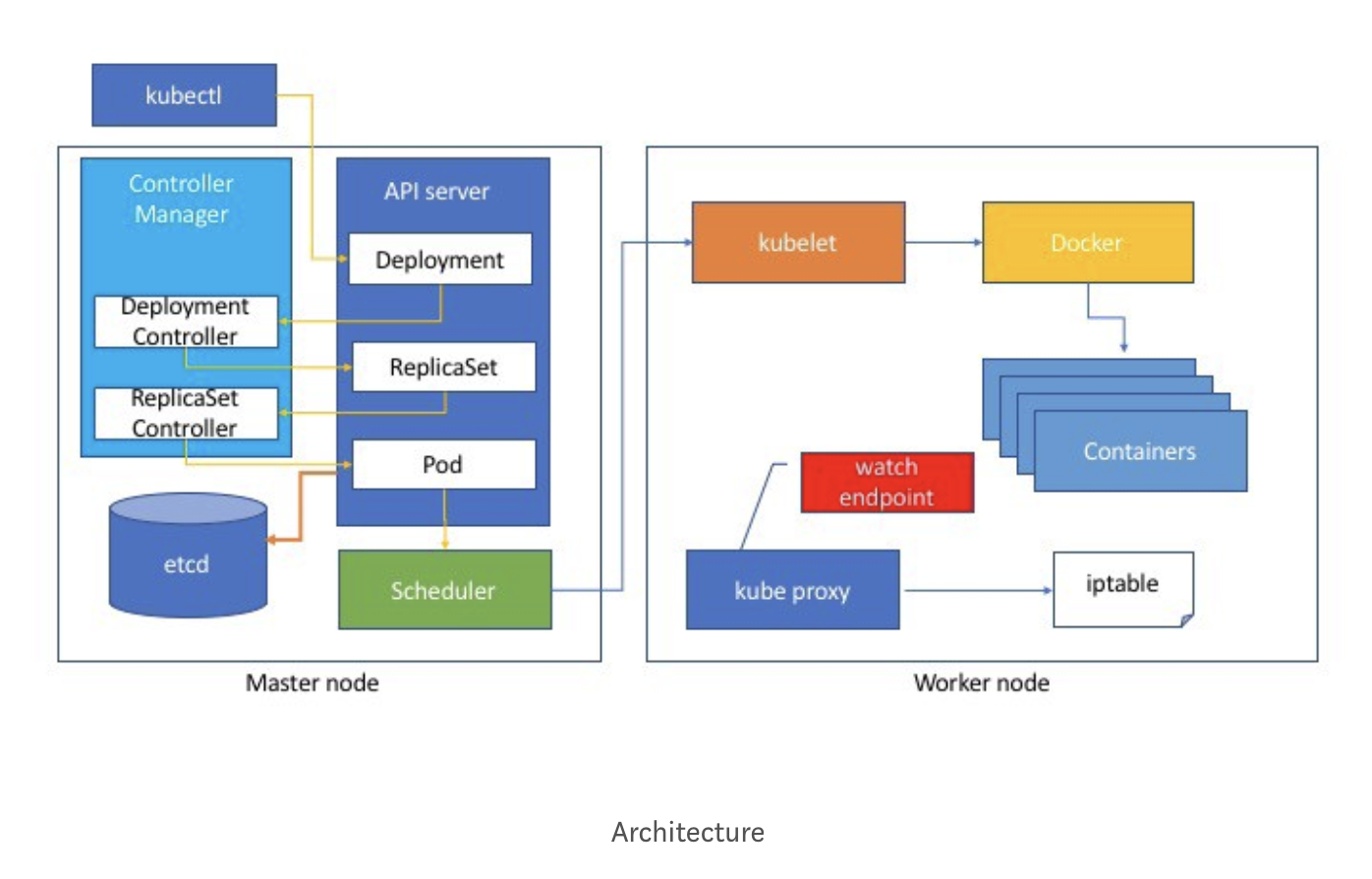

- Deployments & ReplicaSets

A Deployment controller provides declarative updates for Pods and ReplicaSets.

You describe a desired state in a Deployment, and the Deployment controller changes the actual state to the desired state at a controlled rate. You can define Deployments to create new ReplicaSets, or to remove existing Deployments and adopt all their resources with new Deployments.

Keys related to the Deployment kind

▪ replicas - defines the desired number of pods

▪ selector - specifies a label selector for the Pods targeted by this deployment

  ▪ Tells the ReplicaSet which pods to watch

  ▪ Assigning selectors that cause more than one RS to attempt to control a Pod is a user error

  ▪ K8s tries to avoid oscillation but cannot always

▪ strategy

  ▪ type: Recreate or RollingUpdate (default)

  ▪ maxSurge: max number of pods that can be scheduled above the desired number of pods

  ▪ maxUnavailable: max number of pods that can be unavailable during the update

▪ minReadySeconds - optional field that specifies the minimum number of seconds for which a newly created Pod should be ready without any of its containers crashing, for it to be considered available

▪ revisionHistoryLimit - optional field that specifies the number of old ReplicaSets to retain to allow rollback

▪ template - defines a template to launch a new pod

  ▪ Contains the same elements defined for pod configs

Rolling update sequence for each pod being replaced:

start a new pod

wait for the new pod to become ready

create its endpoint and add it to the load balancer/Service

remove the old pod's endpoint and mark the old pod as terminating

send SIGTERM to the old pod and wait up to terminationGracePeriodSeconds

kill the old pod if it is still running after terminationGracePeriodSeconds
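The effect of maxSurge and maxUnavailable on a rolling update can be worked out with a little arithmetic. The Python sketch below computes the lowest number of available pods and the highest total number of pods allowed during an update. It assumes the documented Kubernetes behavior that both settings default to 25%, that a percentage for maxSurge rounds up, and that a percentage for maxUnavailable rounds down; the function names are hypothetical, and edge cases (such as both values resolving to zero) are not handled.

```python
def _resolve(value, replicas, round_up):
    """Turn an absolute number or an 'N%' string into a pod count."""
    if isinstance(value, str) and value.endswith("%"):
        percent = int(value[:-1])
        if round_up:
            return -(-percent * replicas // 100)  # integer ceiling
        return percent * replicas // 100          # integer floor
    return int(value)


def rolling_update_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return (minimum available pods, maximum total pods) during the update."""
    surge = _resolve(max_surge, replicas, round_up=True)
    unavailable = _resolve(max_unavailable, replicas, round_up=False)
    return replicas - unavailable, replicas + surge


print(rolling_update_bounds(10))        # (8, 13)
print(rolling_update_bounds(4, 1, 0))   # (4, 5)
```

With 10 replicas and the defaults, at least 8 pods stay available and at most 13 pods exist at any moment. Setting maxUnavailable to 0 and maxSurge to 1 trades update speed for full capacity throughout the rollout.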

- Rollouts

A rollout updates a given set of Pods: the ones controlled by this Deployment’s ReplicaSet. It reports success when all the currently deployed Pods match what is expected in the current Deployment. In k8s technical terms, these conditions are all true:

.status.observedGeneration >= .metadata.generation

.status.updatedReplicas == .spec.replicas

.status.availableReplicas >= minimum required

Common rollout commands:

kubectl apply -f appdeploy.yaml

kubectl set image deploy/app mypod=app:2.9 --record

kubectl rollout undo deploy/app

kubectl rollout pause deploy/app

kubectl rollout resume deploy/app

kubectl rollout history deploy/app

kubectl rollout status deploy/app
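The three completion conditions listed above can be written as a single predicate. The Python sketch below evaluates them against a Deployment represented as a plain dictionary. It assumes that availableReplicas is read from the object's status and that the "minimum required" value is passed in by the caller; `rollout_complete` and the sample data are hypothetical.

```python
def rollout_complete(deployment: dict, min_available: int) -> bool:
    """Return True when the Deployment satisfies all three rollout conditions."""
    metadata = deployment["metadata"]
    spec = deployment["spec"]
    status = deployment["status"]
    return (
        # The controller has seen the latest version of the spec.
        status["observedGeneration"] >= metadata["generation"]
        # Every desired replica runs the updated pod template.
        and status["updatedReplicas"] == spec["replicas"]
        # Enough replicas are available to serve traffic.
        and status["availableReplicas"] >= min_available
    )


# Hypothetical Deployment snapshots, before and after a rollout finishes.
in_progress = {
    "metadata": {"generation": 3},
    "spec": {"replicas": 4},
    "status": {"observedGeneration": 3, "updatedReplicas": 2, "availableReplicas": 4},
}
finished = {
    "metadata": {"generation": 3},
    "spec": {"replicas": 4},
    "status": {"observedGeneration": 3, "updatedReplicas": 4, "availableReplicas": 4},
}
print(rollout_complete(in_progress, min_available=3))
print(rollout_complete(finished, min_available=3))
```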

- Jobs

A Job creates one or more Pods and ensures that a specified number of them successfully terminate. As pods successfully complete, the Job tracks the successful completions. When a specified number of successful completions is reached, the task (i.e., the Job) is complete. Deleting a Job will clean up the Pods it created.

- Pods

A Pod (as in a pod of whales or pea pod) is a group of one or more containers (such as Docker containers), with shared storage/network, and a specification for how to run the containers.

The shared context of a Pod is a set of Linux namespaces, cgroups, and potentially other facets of isolation - the same things that isolate a Docker container.

Containers within a Pod share an IP address and port space, and can find each other via localhost. They can also communicate with each other using standard inter-process communications like SystemV semaphores or POSIX shared memory. Containers in different Pods have distinct IP addresses and cannot communicate by IPC without special configuration. These containers usually communicate with each other via Pod IP addresses. They can also use shared volumes.

Pod phases: Pending, Running, Succeeded, Failed, and Unknown.

A Probe is a diagnostic performed periodically by the kubelet on a Container. To perform a diagnostic, the kubelet calls a Handler implemented by the Container. There are three types of handlers:

ExecAction: Executes a specified command inside the Container. The diagnostic is considered successful if the command exits with a status code of 0.

TCPSocketAction: Performs a TCP check against the Container’s IP address on a specified port. The diagnostic is considered successful if the port is open.

HTTPGetAction: Performs an HTTP Get request against the Container’s IP address on a specified port and path. The diagnostic is considered successful if the response has a status code greater than or equal to 200 and less than 400.

Each probe has one of three results:

Success: The Container passed the diagnostic.

Failure: The Container failed the diagnostic.

Unknown: The diagnostic failed, so no action should be taken.
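The success rules for the three handlers and the three possible results can be summarized in a few lines of Python. This is only a sketch of the decision logic described above, not the kubelet's code: `ProbeResult`, the `*_result` helpers, and `evaluate` are hypothetical names, and the caller is assumed to supply the exit code, port state, or HTTP status.

```python
from enum import Enum


class ProbeResult(Enum):
    SUCCESS = "Success"
    FAILURE = "Failure"
    UNKNOWN = "Unknown"


def exec_result(exit_code: int) -> ProbeResult:
    """ExecAction: the command must exit with status code 0."""
    return ProbeResult.SUCCESS if exit_code == 0 else ProbeResult.FAILURE


def tcp_result(port_open: bool) -> ProbeResult:
    """TCPSocketAction: the port must be open."""
    return ProbeResult.SUCCESS if port_open else ProbeResult.FAILURE


def http_result(status_code: int) -> ProbeResult:
    """HTTPGetAction: the status code must satisfy 200 <= code < 400."""
    return ProbeResult.SUCCESS if 200 <= status_code < 400 else ProbeResult.FAILURE


def evaluate(check) -> ProbeResult:
    """Run a diagnostic; if the diagnostic itself breaks, report Unknown."""
    try:
        return check()
    except Exception:
        return ProbeResult.UNKNOWN


print(evaluate(lambda: http_result(302)))
print(evaluate(lambda: http_result(503)))
```

Note that a redirect (3xx) counts as a success for an HTTP probe, while an error inside the diagnostic itself maps to Unknown rather than Failure.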

The kubelet can optionally perform and react to two kinds of probes on running Containers:

livenessProbe (usually checks the Container's own status): Indicates whether the Container is running. If the liveness probe fails, the kubelet kills the Container, and the Container is subjected to its restart policy. If a Container does not provide a liveness probe, the default state is Success.

readinessProbe (usually checks the other services the Container relies on): Indicates whether the Container is ready to service requests. If the readiness probe fails, the endpoints controller removes the Pod’s IP address from the endpoints of all Services that match the Pod. The default state of readiness before the initial delay is Failure. If a Container does not provide a readiness probe, the default state is Success.

- Replica Sets

A ReplicaSet’s purpose is to maintain a stable set of replica Pods running at any given time. As such, it is often used to guarantee the availability of a specified number of identical Pods. We recommend using Deployments instead of directly using ReplicaSets, unless you require custom update orchestration or don’t require updates at all.

- Replication Controllers

A ReplicationController ensures that a specified number of pod replicas are running at any one time. In other words, a ReplicationController makes sure that a pod or a homogeneous set of pods is always up and available. A ReplicationController is similar to a process supervisor, but instead of supervising individual processes on a single node, the ReplicationController supervises multiple pods across multiple nodes.

- Stateful Sets

StatefulSet is the workload API object used to manage stateful applications. It manages the deployment and scaling of a set of Pods, and provides guarantees about the ordering and uniqueness of these Pods.

StatefulSets are valuable for applications that require one or more of the following.

Stable, unique network identifiers.

Stable, persistent storage.

Ordered, graceful deployment and scaling.

Ordered, automated rolling updates.

Discovery and Load Balancing

- Ingress

Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. Traffic routing is controlled by rules defined on the Ingress resource.

An Ingress can be configured to give Services externally-reachable URLs, load balance traffic, terminate SSL / TLS, and offer name based virtual hosting. An Ingress controller is responsible for fulfilling the Ingress, usually with a load balancer, though it may also configure your edge router or additional frontends to help handle the traffic.

An Ingress does not expose arbitrary ports or protocols. Exposing services other than HTTP and HTTPS to the internet typically uses a Service of type NodePort or LoadBalancer.

- Services

A Service is an abstract way to expose an application running on a set of Pods as a network service. The set of Pods targeted by a Service is usually determined by a selector.
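How a selector picks the Pods behind a Service can be sketched in Python as a simple label match. This is an illustration of equality-based selection only, with hypothetical pod data and a hypothetical `select_pods` helper: a Pod is targeted when every key/value pair in the selector appears in its labels.

```python
def select_pods(selector: dict, pods: list) -> list:
    """Return the names of pods whose labels contain every selector pair."""
    if not selector:
        # A Service without a selector does not pick pods automatically.
        return []
    return [
        pod["name"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


# Hypothetical pods; extra labels on a pod do not prevent a match.
pods = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend", "canary": "true"}},
    {"name": "db-1", "labels": {"app": "db", "tier": "backend"}},
]
print(select_pods({"app": "web"}, pods))  # ['web-1', 'web-2']
```

The same matching idea is what lets a Deployment's selector and a Service's selector point at the same set of Pods without referencing each other directly.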

Config and Storage

- Config Maps

ConfigMaps allow you to decouple configuration artifacts from image content to keep containerized applications portable.

The ConfigMap resource provides mechanisms to inject containers with configuration data while keeping containers agnostic of Kubernetes.

ConfigMap restrictions

ConfigMaps must be created before they are consumed in pods. Controllers may be written to tolerate missing configuration data; consult individual components configured via ConfigMap on a case-by-case basis.

If ConfigMaps are modified or updated, any pods that use that ConfigMap may need to be restarted in order for the changes made to take effect.

ConfigMaps are namespaced resources, so they can only be referenced and mounted by pods residing in the same namespace.

Quota for ConfigMap size has not been implemented yet, but etcd does have a 1MB limit for objects stored within it.

Kubelet only supports use of ConfigMap for pods it gets from the API server. This includes any pods created using kubectl, or indirectly via a ReplicaSet. It does not include pods created via the Kubelet’s --manifest-url flag, its --config flag, or its REST API (these are not common ways to create pods).

- Persistent Volume Claims

A PersistentVolumeClaim (PVC) is a request for storage by a user. It is similar to a pod. Pods consume node resources and PVCs consume PV resources. Pods can request specific levels of resources (CPU and Memory). Claims can request specific size and access modes (e.g., can be mounted once read/write or many times read-only).

While PersistentVolumeClaims allow a user to consume abstract storage resources, it is common that users need PersistentVolumes with varying properties, such as performance, for different problems. Cluster administrators need to be able to offer a variety of PersistentVolumes that differ in more ways than just size and access modes, without exposing users to the details of how those volumes are implemented.

- Secrets

Kubernetes secret objects let you store and manage sensitive information, such as passwords, OAuth tokens, and ssh keys.

Metrics Server

The Metrics Server was first released in September 2017 and is not installed automatically by all K8s installers. The Metrics Server does not run as a module within the Controller Manager; rather, it runs as a standalone Deployment, typically in the kube-system namespace. The Metrics Server requires a ServiceAccount (SA) with appropriate RBAC roles so that it can communicate with the api-server and extend the K8s API.

The Metrics Server must also be equipped with certificates/keys that allow it to connect to each of the Kubelets in the system. Kubelets expose a /metrics endpoint; the Metrics Server scrapes this endpoint for metrics data regularly, storing the results in memory. When inbound requests for metrics arrive at the api-server, the Metrics Server is called to answer them.

kubectl top

command

kubectl refer

- force delete

refer

k8s basic

k8snotes