MCP

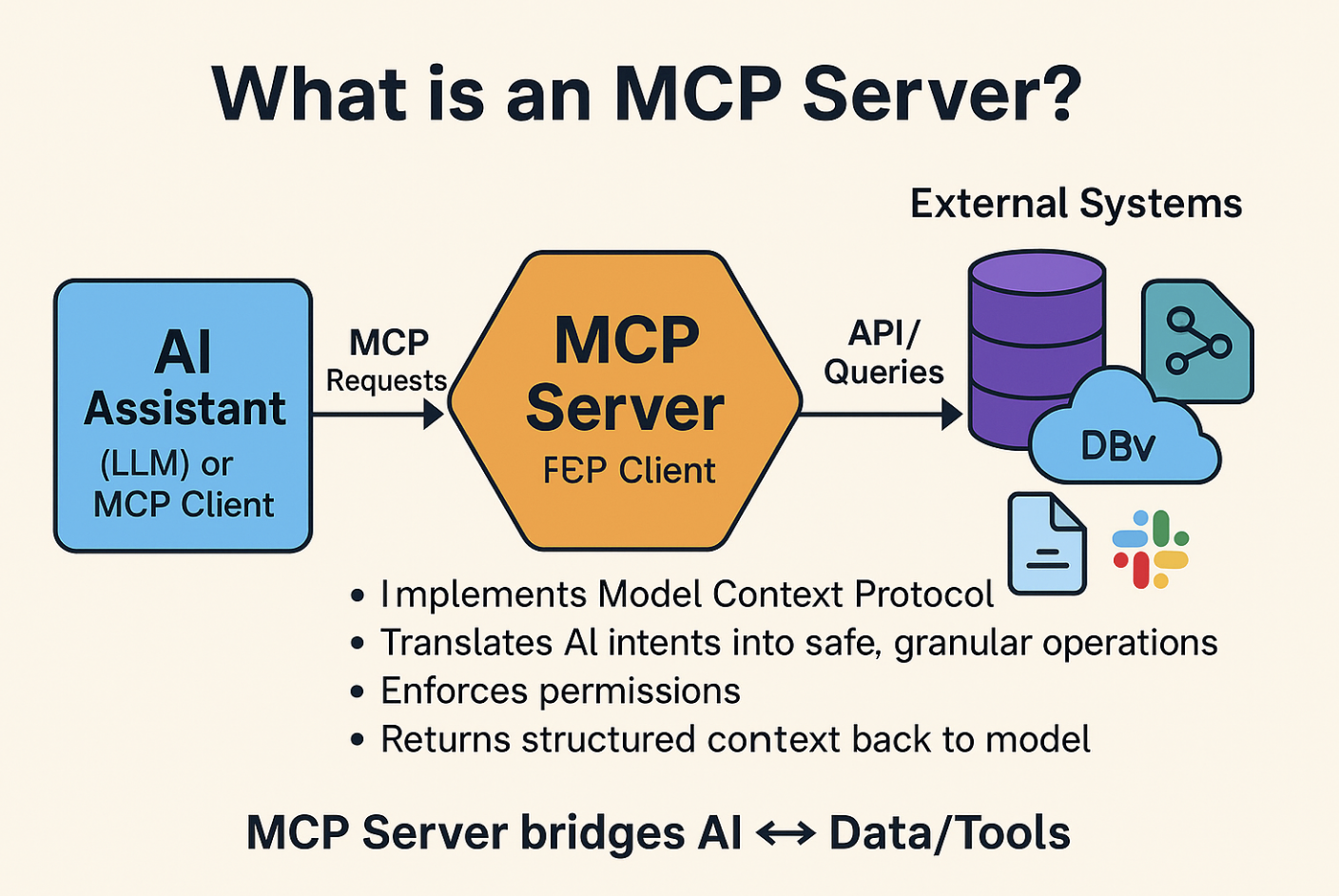

The Model Context Protocol (MCP) is a standardization layer that lets AI applications communicate with external services, such as tools, databases, and predefined prompt templates, through a single common interface. An MCP setup has three main components:

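- MCP host: the AI application the user interacts with (for example, a chat app or an IDE assistant). It manages one or more MCP clients and coordinates calls to the LLM.

- MCP client: a connector inside the host that maintains a one-to-one connection with a single MCP server.

- MCP server: a program that exposes capabilities (tools, resources, and prompt templates) to clients through the protocol.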

AI Agent

An AI agent is a system or program that can autonomously perform tasks on behalf of a user or another system. It does so by designing its own workflow and using the tools available to it. Multi-agent systems consist of multiple AI agents working together to perform tasks on behalf of a user or another system.
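The loop below is a minimal sketch of an agent that chooses its next step and calls tools until it decides it is done. The function `plan_next_step` is a hypothetical, hard-coded stand-in for the model that would normally do the planning. The two tools are toy functions.

```python
from typing import Callable, Optional

# Hypothetical tools the agent is allowed to call
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda q: f"3 results for '{q}'",
    "summarize": lambda text: f"summary of ({text})",
}


def plan_next_step(goal: str, history: list[tuple[str, str]]) -> Optional[tuple[str, str]]:
    """Stand-in for an LLM planner: returns (tool name, tool input), or None when done.

    This fixed policy searches first, then summarizes the last observation, then stops.
    """
    if not history:
        return ("search", goal)
    if history[-1][0] == "search":
        return ("summarize", history[-1][1])
    return None


def run_agent(goal: str, max_steps: int = 5) -> list[tuple[str, str]]:
    history: list[tuple[str, str]] = []  # (tool name, observation) pairs
    for _ in range(max_steps):  # hard cap so a bad plan cannot loop forever
        step = plan_next_step(goal, history)
        if step is None:
            break
        tool_name, tool_input = step
        observation = TOOLS[tool_name](tool_input)
        history.append((tool_name, observation))
    return history


for tool, observation in run_agent("MCP transports"):
    print(tool, "->", observation)
```

The `max_steps` cap is a common safeguard in agent loops. A real planner may never return "done", so the loop needs a hard stop.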

MCP server and its benefits


Key Benefits of Model Context Protocol (MCP)

- Interoperability and Standardization: MCP provides a single, standardized protocol for connecting AI systems to data sources and tools, replacing custom, fragmented integrations.

- Reduced Vendor Lock-in: By promoting open standards, MCP makes AI components more interchangeable (fungible), which increases flexibility and reduces reliance on specific vendors, according to Medium.

- Faster AI Project Scaling: A unified connection model makes it easier and quicker to scale AI-powered applications across multiple data sources and systems, notes Thoughtworks.

- Reduced Development and Maintenance Burden: Developers can build AI applications faster and with less complexity, because MCP removes the need for a custom connection between each new AI model and each external system, according to the Model Context Protocol.

- Enhanced AI Capabilities: Models can access up-to-date information. This mitigates the limits of their training-data cutoff and reduces hallucinations by grounding answers in factual data from reliable sources.

- Personalization and Specialized Knowledge: MCP lets models access user-specific information and domain-specific knowledge bases, leading to more personalized, expert-level responses.

- Improved Contextual Understanding: By giving models real-time access to relevant, current, and organization-specific information, MCP helps them build a deeper understanding of context, leading to more accurate and relevant responses.

- Less Redundant Information Processing: MCP manages context efficiently by maintaining state between interactions and storing relevant information in a structured way, so the same information does not have to be reprocessed.

- Increased Trust and Transparency: MCP implementations often attribute information to its sources, making clear where the AI’s knowledge comes from and increasing user trust.

- Reduced “Context Switching Tax”: MCP’s standardized interface minimizes the performance degradation that occurs when AI models switch between different information sources or tasks, leading to more coherent and consistent responses.
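One way to see the interoperability and maintenance benefits is to count integrations. As a simplified illustration, assume there are $M$ AI applications and $N$ tools or data sources, and that every application should reach every tool. Without a shared protocol, each pair needs its own connector. With MCP, each application implements the client side once, and each tool is wrapped in a server once:

$$
\text{custom integrations: } M \times N
\qquad\longrightarrow\qquad
\text{with MCP: } M + N
$$

$$
M = 3,\; N = 5: \qquad 3 \times 5 = 15 \quad \text{vs.} \quad 3 + 5 = 8
$$

The gap widens as either side grows. Real systems rarely need every pair connected, so treat this as an upper-bound comparison rather than a measured saving.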

MCP workflow example


Let’s break down how it works:

1️⃣ Prompt Ingestion – It all begins with a user prompt.

2️⃣ Tool Discovery – The MCP host, through its MCP client, retrieves metadata (name, description, and input schema) for every tool the MCP server exposes.

3️⃣ Planning Phase – The prompt and the tool metadata are sent together, in a structured form, to the LLM. The LLM reasons about the request and selects the most suitable tool.

4️⃣ Tool Execution – The client asks the MCP server to run the selected tool (code, an API call, a database query, etc.), and the server returns the result.

5️⃣ Context Update – The tool’s result is sent back to the LLM together with the original prompt, so the conversation keeps its context.

6️⃣ LLM Final Output – The LLM combines the prompt and the tool result into a fully informed response, which is delivered to the user.
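The six steps above can be traced end to end in a small, self-contained sketch. It illustrates the flow under stated assumptions and is not an MCP SDK example:

- The server is an in-process function answering JSON-RPC-style `tools/list` and `tools/call` requests.
- `fake_llm` is a hypothetical stand-in for a real model.
- The `messages` format with `user` and `tool` roles is invented for this sketch.

```python
# --- Toy MCP server (hypothetical): answers "tools/list" and "tools/call" ---
def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # canned data instead of a real weather API


SERVER_TOOLS = {
    "get_weather": {
        "fn": get_weather,
        "description": "Current weather for a city",
        "inputSchema": {"type": "object", "properties": {"city": {"type": "string"}}},
    }
}


def server_handle(request: dict) -> dict:
    if request["method"] == "tools/list":
        tools = [{"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for name, t in SERVER_TOOLS.items()]
        return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": tools}}
    if request["method"] == "tools/call":
        params = request["params"]
        text = SERVER_TOOLS[params["name"]]["fn"](**params["arguments"])
        return {"jsonrpc": "2.0", "id": request["id"],
                "result": {"content": [{"type": "text", "text": text}]}}
    return {"jsonrpc": "2.0", "id": request["id"],
            "error": {"code": -32601, "message": "Method not found"}}


# --- Stand-in for the LLM (hypothetical): picks a tool, then answers from its result ---
def fake_llm(messages: list[dict], tools: list[dict]) -> dict:
    last = messages[-1]
    if last["role"] == "user":
        # Planning: choose the first advertised tool and naively take the last word as the city
        city = last["content"].rstrip("?").split()[-1]
        return {"tool": tools[0]["name"], "arguments": {"city": city}}
    # Final output: phrase an answer from the tool result
    return {"answer": f"Here is what I found: {last['content']}"}


def run_workflow(prompt: str) -> str:
    messages = [{"role": "user", "content": prompt}]                 # 1. prompt ingestion
    listing = server_handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
    tools = listing["result"]["tools"]                                # 2. tool discovery
    decision = fake_llm(messages, tools)                              # 3. planning phase
    call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
            "params": {"name": decision["tool"], "arguments": decision["arguments"]}}
    result = server_handle(call)["result"]                            # 4. tool execution
    messages.append({"role": "tool", "content": result["content"][0]["text"]})  # 5. context update
    return fake_llm(messages, tools)["answer"]                        # 6. final output


print(run_workflow("What is the weather in Paris?"))  # Here is what I found: Sunny in Paris
```

In a real deployment, steps 2 and 4 would travel over an MCP transport, and step 3 would be a call to an actual model that returns a structured tool choice.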