Best Article for K8s network

awesome article

network training

- Container-to-Container networking

In terms of Docker constructs, a Pod is modelled as a group of Docker containers that share a network namespace. Containers within a Pod all have the same IP address and port space, assigned through the Pod’s network namespace, and can find each other via localhost because they reside in the same namespace. On a virtual machine, a separate network namespace can be created for each Pod.
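
As a rough illustration of "find each other via localhost", the sketch below uses two threads in one process as stand-ins for two containers in the same Pod. This is only an analogy: the function names are made up for this note, and real containers share the namespace through the container runtime, not through Python threads.

```python
import socket
import threading


def serve_once(server: socket.socket) -> None:
    """'Container A': accept one connection and reply with the data upper-cased."""
    conn, _ = server.accept()
    with conn:
        conn.sendall(conn.recv(1024).upper())


def talk_over_localhost(message: bytes) -> bytes:
    # 'Container A' listens on localhost; port 0 lets the OS pick a free port.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))
    server.listen(1)
    port = server.getsockname()[1]
    worker = threading.Thread(target=serve_once, args=(server,))
    worker.start()

    # 'Container B' reaches A through localhost; no Pod IP is involved.
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(message)
        reply = client.recv(1024)

    worker.join()
    server.close()
    return reply


if __name__ == "__main__":
    print(talk_over_localhost(b"ping"))
```

Because the port space is shared too, two containers in one Pod cannot both bind the same port, just as a second `bind` on the same port would fail here.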

- Pod-to-Pod networking

In Kubernetes, every Pod has a real IP address and each Pod communicates with other Pods using that IP address.

On the same node:

Namespaces can be connected using a Linux Virtual Ethernet Device, or veth pair: two virtual interfaces that can be placed in different namespaces. To connect Pod namespaces, we can assign one side of the veth pair to the root network namespace, and the other side to the Pod’s network namespace.
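
A minimal sketch of the wiring, assuming the standard iproute2 `ip` tool. The function only builds the command lines (running them needs root); the namespace and interface names are placeholders, not anything Kubernetes itself uses.

```python
from __future__ import annotations


def veth_commands(pod_ns: str, host_if: str, pod_if: str) -> list[list[str]]:
    """Build the iproute2 commands that connect a Pod namespace to the root namespace."""
    return [
        # Create the Pod's network namespace.
        ["ip", "netns", "add", pod_ns],
        # Create the veth pair; both ends start in the root namespace.
        ["ip", "link", "add", host_if, "type", "veth", "peer", "name", pod_if],
        # Move one end into the Pod's namespace.
        ["ip", "link", "set", pod_if, "netns", pod_ns],
        # Bring both ends up.
        ["ip", "link", "set", host_if, "up"],
        ["ip", "netns", "exec", pod_ns, "ip", "link", "set", pod_if, "up"],
    ]


if __name__ == "__main__":
    for cmd in veth_commands("pod1", "veth-host1", "veth-pod1"):
        print(" ".join(cmd))
```

To review the commands before running them by hand, print them as in the `__main__` block; passing each list to `subprocess.run` as root would apply them.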

On different nodes:

Generally, every Node in your cluster is assigned a CIDR block specifying the IP addresses available to Pods running on that Node. Once traffic destined for the CIDR block reaches the Node, it is the Node’s responsibility to forward it to the correct Pod.
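
The per-Node CIDR idea can be sketched with Python's `ipaddress` module. The node names and CIDR blocks below are invented for illustration; a real cluster gets them from its network plugin or cloud provider.

```python
import ipaddress

# Hypothetical Node-to-CIDR assignment.
NODE_CIDRS = {
    "node-a": ipaddress.ip_network("10.0.1.0/24"),
    "node-b": ipaddress.ip_network("10.0.2.0/24"),
}


def node_for_pod_ip(pod_ip):
    """Return the Node whose CIDR block contains pod_ip, or None if no block matches."""
    addr = ipaddress.ip_address(pod_ip)
    for node, cidr in NODE_CIDRS.items():
        if addr in cidr:
            return node
    return None


if __name__ == "__main__":
    print(node_for_pod_ip("10.0.2.17"))
```

The network only has to get the packet to the right Node; picking the right Pod on that Node is the local veth and bridge step described earlier.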

When the CNI plugin is deployed to the cluster (this example is AWS), each Node (EC2 instance) creates multiple elastic network interfaces (ENIs) and allocates IP addresses to those interfaces, forming a CIDR block for each Node. When Pods are deployed, a small binary deployed to the Kubernetes cluster as a DaemonSet receives any requests to add a Pod to the network from the Node’s local kubelet process. This binary picks an available IP address from the Node’s pool of ENI addresses and assigns it to the Pod by wiring up the virtual Ethernet device and bridge within the Linux kernel, as described above for Pods on the same Node. With this in place, Pod traffic is routable across Nodes within the cluster.
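
The "pick an available IP from the Node’s pool" step might look roughly like the toy allocator below. The class and method names are hypothetical and this is not the real plugin's code; it only shows the bookkeeping idea.

```python
class NodeIpPool:
    """Toy per-Node address pool: hand out free IPs to Pods and take them back."""

    def __init__(self, addresses):
        self._free = list(addresses)  # addresses not yet given to a Pod
        self._assigned = {}           # pod name -> IP address

    def assign(self, pod_name):
        # Asking again for the same Pod returns the address it already has.
        if pod_name in self._assigned:
            return self._assigned[pod_name]
        if not self._free:
            raise RuntimeError("no free IP addresses on this node")
        ip = self._free.pop(0)
        self._assigned[pod_name] = ip
        return ip

    def release(self, pod_name):
        # Return the Pod's address to the pool when the Pod goes away.
        ip = self._assigned.pop(pod_name, None)
        if ip is not None:
            self._free.append(ip)


if __name__ == "__main__":
    pool = NodeIpPool(["10.0.1.5", "10.0.1.6"])
    print(pool.assign("web"), pool.assign("db"))
```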

- Pod-to-Service networking

A Kubernetes Service manages the state of a set of Pods, allowing you to track a set of Pod IP addresses that are dynamically changing over time. Services act as an abstraction over Pods and assign a single virtual IP address to a group of Pod IP addresses. Any traffic addressed to the virtual IP of the Service will be routed to the set of Pods that are associated with the virtual IP.

In Kubernetes, iptables rules are configured by the kube-proxy controller that watches the Kubernetes API server for changes. When a change to a Service or Pod updates the virtual IP address of the Service or the IP address of a Pod, iptables rules are updated to correctly route traffic directed at a Service to a backing Pod. The iptables rules watch for traffic destined for a Service’s virtual IP and, on a match, a random Pod IP address is selected from the set of available Pods and the iptables rule changes the packet’s destination IP address from the Service’s virtual IP to the IP of the selected Pod. As Pods come up or down, the iptables ruleset is updated to reflect the changing state of the cluster. Put another way, iptables has done load-balancing on the machine to take traffic directed to a service’s IP to an actual pod’s IP.

On the return path, the IP address is coming from the destination Pod. In this case iptables again rewrites the IP header to replace the Pod IP with the Service’s IP so that the Pod believes it has been communicating solely with the Service’s IP the entire time.
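
The two rewrites (destination on the way out, source on the way back) can be modelled in a few lines. This is a simplified sketch, not how netfilter is implemented: real connection tracking keys on the full 5-tuple, and the class, field names, and addresses here are made up.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


class ServiceNat:
    """Toy model of Service DNAT plus the reverse rewrite on replies."""

    def __init__(self, service_ip, pod_ips, rng=None):
        self.service_ip = service_ip
        self.pod_ips = list(pod_ips)
        self.rng = rng or random.Random()
        self.conntrack = {}  # (client_ip, pod_ip) -> service_ip

    def outbound(self, pkt):
        # Only traffic addressed to the Service's virtual IP is rewritten.
        if pkt.dst != self.service_ip:
            return pkt
        pod_ip = self.rng.choice(self.pod_ips)  # random backing Pod
        self.conntrack[(pkt.src, pod_ip)] = self.service_ip
        return Packet(src=pkt.src, dst=pod_ip)

    def inbound(self, pkt):
        # A reply from a Pod gets its source put back to the Service IP.
        service_ip = self.conntrack.get((pkt.dst, pkt.src))
        if service_ip is None:
            return pkt
        return Packet(src=service_ip, dst=pkt.dst)


if __name__ == "__main__":
    nat = ServiceNat("10.96.0.10", ["10.0.1.5", "10.0.2.7"])
    request = nat.outbound(Packet(src="10.0.1.9", dst="10.96.0.10"))
    reply = nat.inbound(Packet(src=request.dst, dst="10.0.1.9"))
    print(request, reply)
```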

Kubernetes 1.11 made a second option for in-cluster load balancing generally available: IPVS. IPVS (IP Virtual Server) is also built on top of netfilter and implements transport-layer load balancing as part of the Linux kernel. IPVS can direct requests for TCP- and UDP-based services to the real servers, and make services of the real servers appear as virtual services on a single IP address. This makes IPVS a natural fit for Kubernetes Services.

- Internet-to-Service networking

Egress — Routing traffic to the Internet

With an Internet gateway in place, VMs are free to route traffic to the Internet. Unfortunately, there is a small problem: Pods have their own IP addresses, which differ from the IP address of the Node that hosts them, and the NAT at the Internet gateway only works with VM IP addresses because it has no knowledge of which Pods are running on which VMs; the gateway is not container aware. Kubernetes solves this using iptables (again): the packet’s source IP is rewritten from the Pod IP to the Node (VM) IP before it leaves the Node.
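
A small sketch of that source rewrite, assuming a simplified port-mapping table. The class name, starting port, and addresses are placeholders; the actual work is done by iptables and connection tracking in the kernel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


class NodeMasquerade:
    """Toy source NAT: Pod traffic leaves the Node carrying the Node's IP."""

    def __init__(self, node_ip, first_port=32768):
        self.node_ip = node_ip
        self.next_port = first_port
        self.table = {}  # node port -> (pod_ip, pod_port)

    def egress(self, pkt):
        # Swap the Pod's source address for the Node's and remember the mapping.
        node_port = self.next_port
        self.next_port += 1
        self.table[node_port] = (pkt.src_ip, pkt.src_port)
        return Packet(self.node_ip, node_port, pkt.dst_ip, pkt.dst_port)

    def ingress(self, pkt):
        # Replies arrive at the Node's IP; restore the Pod as the destination.
        pod_ip, pod_port = self.table[pkt.dst_port]
        return Packet(pkt.src_ip, pkt.src_port, pod_ip, pod_port)


if __name__ == "__main__":
    nat = NodeMasquerade("172.31.0.4")
    out = nat.egress(Packet("10.0.1.5", 40000, "203.0.113.10", 443))
    back = nat.ingress(Packet("203.0.113.10", 443, out.src_ip, out.src_port))
    print(out, back)
```

After this step the Internet gateway only ever sees the Node’s address, which is one it knows how to translate.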

Ingress — Routing Internet traffic to Kubernetes

Ingress is divided into two solutions that work on different parts of the network stack: (1) a Service LoadBalancer and (2) an Ingress controller.

lb,ingress,dns

ingress

bgp

dns

debugging network

refer

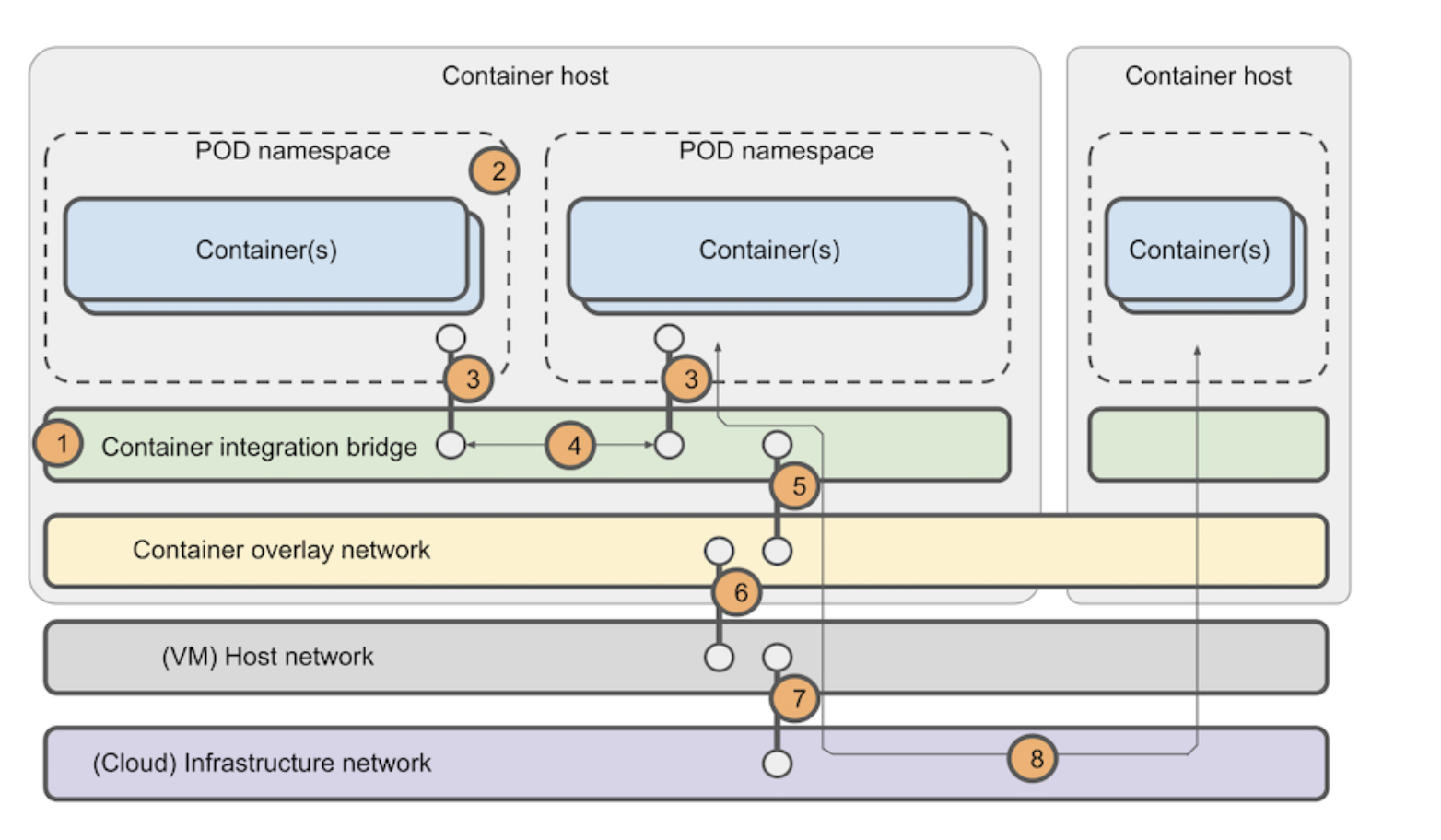

- A container integration bridge is created initially on the container host system. This bridge lives in the host network namespace and is shared across all containers and PODs on the given host for providing network connectivity.

- When a POD is created, the container runtime creates a network namespace for the POD. All the containers of the POD will live in this namespace and each POD will have its own namespace.

- The container network plugin creates a logical ‘cable’ between the POD namespace and the container integration bridge. This is known as a veth pair. Note that there is only one veth pair per POD, even with multi-container pods. The container network plugin also allocates an IP address for the POD from the POD network CIDR.

- Traffic between PODs on the same host traverses the local container integration bridge and does not leave the host.

- Traffic destined for PODs on other hosts is forwarded to the container overlay network. The container network logically spans all hosts in the cluster, i.e. it provides a common layer 3 network for connecting all PODs in the cluster.

- The container overlay network encapsulates POD traffic and forwards it to the host network. The host network ensures the traffic ends up on the host containing the target POD, where the steps above are applied in reverse.

- Whether the cluster hosts are VMs or bare-metal systems there will inevitably be an infrastructure below these hosts. It is not always possible to gain access to this infrastructure. However, this infrastructure can be a considerable source of network issues so it is important to remember that it exists.

- Traffic between PODs on different hosts always traverses the container overlay network, the host network, and the infrastructure network.
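
The list above boils down to one decision: same host or not. A hedged sketch, with hop labels chosen only for this note, that returns the layers a packet crosses; it can serve as a checklist of where to look when debugging.

```python
from __future__ import annotations


def traffic_path(src_host: str, dst_host: str) -> list[str]:
    """List the layers a POD-to-POD packet crosses, in order."""
    if src_host == dst_host:
        # Same host: the packet never leaves the integration bridge.
        return ["source POD veth", "integration bridge", "destination POD veth"]
    return [
        "source POD veth",
        "integration bridge",
        "container overlay network (encapsulate)",
        "host network",
        "infrastructure network",
        "host network",
        "container overlay network (decapsulate)",
        "integration bridge",
        "destination POD veth",
    ]


if __name__ == "__main__":
    for hop in traffic_path("host-1", "host-2"):
        print(hop)
```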

Iptables vs IPVS vs BPF vs eBPF

refer

IPVS vs. IPTABLES

IPVS mode was introduced in Kubernetes v1.8, went beta in v1.9 and GA in v1.11. IPTABLES mode was added in v1.1 and has been the default operating mode since v1.2. Both IPVS and IPTABLES are based on netfilter. Differences between IPVS mode and IPTABLES mode are as follows:

- IPVS provides better scalability and performance for large clusters.

- IPVS supports more sophisticated load balancing algorithms than IPTABLES (least load, least connections, locality, weighted, etc.).

- IPVS supports server health checking and connection retries, etc.
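
To make "more sophisticated load balancing algorithms" concrete, here is a sketch of two of the simpler IPVS scheduling ideas, round-robin and least-connections. The functions and backend addresses are illustrative only; IPVS implements its schedulers inside the kernel.

```python
import itertools


def round_robin(backends):
    """Cycle through the backends in order, forever."""
    return itertools.cycle(backends)


def least_connections(active):
    """Pick the backend with the fewest active connections.

    `active` maps backend address -> current connection count.
    """
    return min(active, key=active.get)


if __name__ == "__main__":
    active = {"10.0.1.5": 3, "10.0.2.7": 1, "10.0.3.9": 2}
    rr = round_robin(list(active))
    print([next(rr) for _ in range(4)])
    print(least_connections(active))
```

By contrast, the iptables mode described earlier picks a backing Pod at random.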

nat rules

sudo iptables -nvL -t nat

flat

▪ Pods on a node can communicate with all pods on all nodes without NAT

▪ Agents on a node (e.g. system daemons, kubelet) can communicate with all pods on that node

▪ Pods in the host network of a node can communicate with all pods on all nodes without NAT

bridge

▪ Docker’s docker0 bridge is an example of a Linux bridge

▪ Kubelet can create and manage bridges in kubenet mode (cbr0)

docker0

network policy

command

iptables -t nat -L KUBE-SERVICES

conntrack -L

conntrack -E